Why Neural Networks Are a Bad Technology

Stop pretending that they deal with truth values, and they could be a decent tech.

There is a big difference between pattern matching and determining truth values.

I’ll show some examples of how.

Let's say we attach the mathematical values of a certain signal in a network to a text label.

It doesn't mean that the signal "is" or "means" that text label. It only indicates an arbitrary relationship between the signal and the label that just happened to be attached to it:

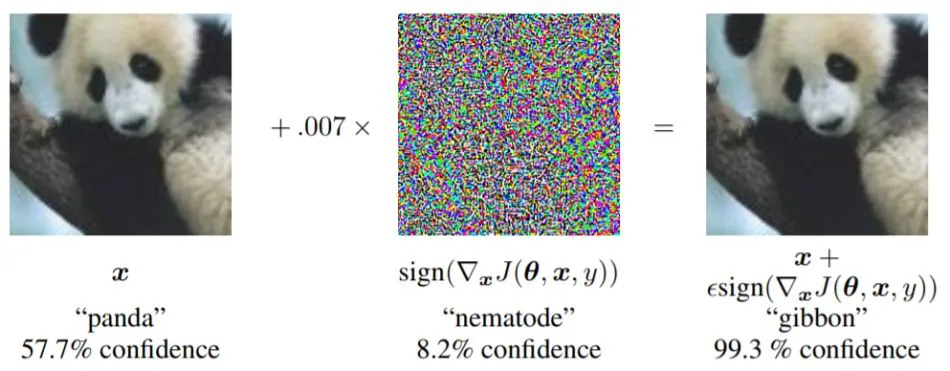

In the above example (no, it’s not overfitting), we can manipulate the signal by adding a perturbation to the pixels that is too small for the human eye to see, so that the image matches whatever label we choose. The human eye can't even tell the difference between the pictures. If we’re identifying what the picture is actually representing, then there SHOULDN’T BE A DIFFERENCE between what the picture “is” before or after the change… But the machine identifies a huge difference: the difference between a panda and a gibbon.
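To make the mechanism concrete, here is a minimal sketch of a sign-based perturbation in the style of the fast gradient sign method. It is an assumption-heavy toy: the "model" is a random linear scorer rather than a trained neural network, the "image" is random noise, and the names `predict`, `sign_perturb`, `weights`, and `image` are all hypothetical. It only illustrates how many tiny per-pixel changes can add up to a different label.

```python
import numpy as np

def predict(weights, x):
    """Return the index of the highest-scoring label for input x."""
    return int(np.argmax(weights @ x))

def sign_perturb(weights, x, source, target, epsilon):
    """Move every pixel by at most epsilon in the direction that favors target.

    For a linear scorer, the gradient of (target score - source score)
    with respect to x is just the difference of the two weight rows.
    """
    gradient = weights[target] - weights[source]
    return x + epsilon * np.sign(gradient)

rng = np.random.default_rng(0)
labels = ["panda", "gibbon"]   # hypothetical labels for the toy scorer
n_pixels = 10_000
weights = rng.normal(size=(len(labels), n_pixels))  # stand-in for a trained model
image = rng.uniform(0.1, 0.9, size=n_pixels)        # stand-in for a picture

before = predict(weights, image)
target = 1 - before            # aim for the other label
epsilon = 0.05                 # each pixel moves by at most 5% of its range
adversarial = sign_perturb(weights, image, before, target, epsilon)
after = predict(weights, adversarial)

print("label before:", labels[before])
print("label after: ", labels[after])
print("largest pixel change:", float(np.max(np.abs(adversarial - image))))
```

No single pixel changes by more than `epsilon`, yet the label moves, because thousands of small nudges all push the score in the same direction.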

A more practical example I've found myself is to ask any image generator to draw an ouroboros. Then look up in a dictionary what an "ouroboros" actually is. Question: does the generator ever actually give you one, or does it give you anything BUT one?

Predicting what the NEXT PIXEL in a picture should be, irrespective of what the picture "is representing," becomes a problem. The same goes for predicting the NEXT TOKEN (a chunk of characters) that goes into a string in an LLM such as any GPT.
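A toy sketch of what "predicting the next item" means, under loud assumptions: this is a word-level bigram counter, not an LLM, and `train_bigram`, `predict_next`, and the tiny `corpus` are all made up for illustration. The point it shows is narrow: the prediction follows frequency in the training text, not whether the resulting sentence is true.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows each word in the training text."""
    follow_counts = defaultdict(Counter)
    words = text.split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next(follow_counts, word):
    """Return the continuation seen most often after `word` in training."""
    return follow_counts[word].most_common(1)[0][0]

# Hypothetical training text: the false statement appears twice, the true one once.
corpus = "the sky is green . the sky is green . the sky is blue ."
model = train_bigram(corpus)

print(predict_next(model, "is"))  # prints "green"
```

Here the completion is "green" simply because that continuation is more common in the data.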

Neural networks never deal with knowledge in any kind of legitimate fashion. They will always have epistemic issues because that's their underlying nature.

Additionally, it's a brittle house of cards that collapses if anything falls outside the range of the model’s data, while the actual "range" it has to face is the entire real world and the entirety of human knowledge.
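The out-of-range brittleness can be sketched with a much simpler fitted model. This is an illustration under stated assumptions, not a neural network: a degree-5 polynomial (via `np.polyfit`) stands in for the learned model, `sin(x)` stands in for the real world, and the names `model`, `in_range_error`, and `out_of_range_error` are hypothetical.

```python
import numpy as np

# "Training data": the true function is only ever observed on [0, pi].
train_x = np.linspace(0.0, np.pi, 50)
train_y = np.sin(train_x)

# Fit a degree-5 polynomial as a stand-in for a learned model.
coefficients = np.polyfit(train_x, train_y, deg=5)

def model(x):
    """Evaluate the fitted polynomial at x."""
    return np.polyval(coefficients, x)

in_range_x = 1.0               # inside the training range
out_of_range_x = 3.0 * np.pi   # far outside the training range

in_range_error = abs(model(in_range_x) - np.sin(in_range_x))
out_of_range_error = abs(model(out_of_range_x) - np.sin(out_of_range_x))

print("error inside the training range: ", in_range_error)
print("error outside the training range:", out_of_range_error)
```

Inside the range the fit looks excellent. Outside it, nothing constrains the fitted curve, so the error grows without any warning from the model itself.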

Remember the fatal accident which decapitated a Tesla driver. That was an "out of bounds" condition, or what gets euphemistically called an “edge-case,” where anything unexpected or unaccounted for becomes an “edge-case.”

The same thing happened when a Cruise robotaxi dragged a pedestrian about 20 feet along the pavement.

If people are depending on these NNs for knowledge, facts, life-or-death mission-critical things... Would you bet on these things being right-by-chance without actually dealing with truth values?

Equating these two things is a big category mistake:

Chances of being correct/incorrect due to knowledge being correct/incorrect via limited human experience

Chances of being correct/incorrect due to stochastic mechanisms in a machine

C'mon. Naive school kids mix that up. “Oh, we’ll just get more data into the set” WON’T FIX ANYTHING.

Of course people aren't going to put glue on their pizza (Google shut that down, I hope? Note how Google called it an “edge-case”) but what about when providers give people access to "telehealth" based on LLMs or even go as far as directly relying on them at their facilities? Using LLMs for medical diagnosis can lead to deadly consequences.

There are fundamental epistemic flaws in neural networks that cannot be remedied as long as they work the way they do, and companies are pushing hard to put them absolutely everywhere. Do they care about people...? Of course not. ☠️

Very well said. This is the so-called OOD (out-of-distribution) data that confuses neural networks when they have to make a decision with it. However, real-world data is exactly that: OOD data. Neural networks produce shortcuts and spurious correlations; they don't produce causal relations or explanations, because they do not have knowledge and don't understand context!

The whole purpose of a cognitive system is to deal with information outside of the system. Read. You are very ignorant, unfortunately, so read up on who Daniel Dennett was. That would be the first thing. "He called me Daniel Dennett Junior." I wonder who Daniel Dennett is? Let me look that up. You cannot be spoon-fed everything in life. Your ignorance is on total display for the world to see....