LLMs and Generative AI don't deal with concepts

All you have to do is tell it to draw an ouroboros

LLMs and generative AI programs don’t deal with concepts. They match patterns but don’t actually deal with referents. When generating pictures, they predict pixels from fields of other label-attached pixels but don’t involve themselves in what those groups of pixels actually represent. They deal with patterns, not with what those patterns ARE.

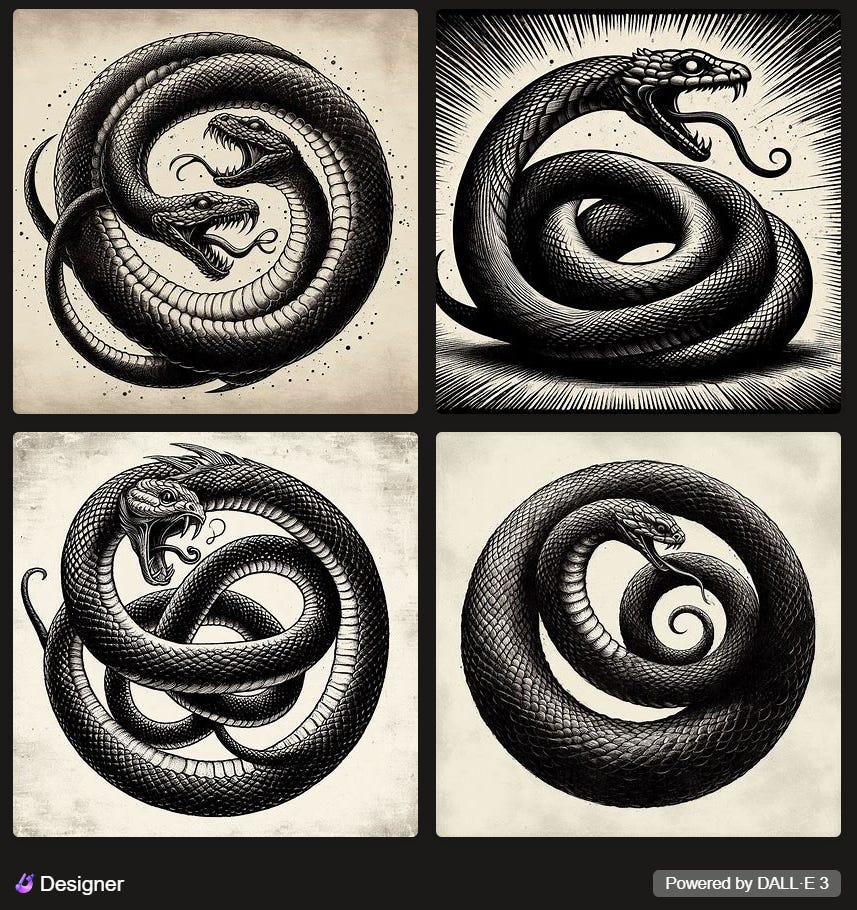

Here’s a simple exercise: Tell a generative AI bot to draw an ouroboros. That’s it. Let’s see how it deals with such a request.

But first, let’s get a precise idea of what an ouroboros is supposed to be. According to Merriam-Webster, it’s:

a circular symbol that depicts a snake or dragon devouring its own tail and that is used especially to represent the eternal cycle of destruction and rebirth

I’m going to use Microsoft’s DALL-E 3-based Copilot Designer because it’s free for anyone to take a crack at. Let the fun begin:

…None of those pictures are of an ouroboros. They all just LOOK LIKE an ouroboros. None of the creatures is devouring its own tail.

Okay, let’s “cheat” (yes, this is already cheating) by straight up TELLING it to draw a "snake swallowing its own tail":

Uh, it did a bit worse.

How about "snake biting its own tail"?

…Not even close now.

Okay, let’s try "snake eating its own tail":

…Nope.

Someone from LinkedIn sent me this group of images from Midjourney, but these are still not pictures of an ouroboros. Note the misshapen “snakelets”:

Finally, another person sent me this:

It was made with this prompt:

Create an image where snakes body creates a clean ring, empty on the inside, only the outline is made of the snake body.

Imagine a ring, where the outline is the snake body. The head of the snake joins the tail. The head is swallowing the tail.

Wow. We’d have to cheat and cheat hard. The thing is, now it's no longer dealing with the concept of an ouroboros. I’d say that's the point... Generative AI just flat out can't deal with concepts, so it basically has to be STRONG-ARMED into producing patterns in a mechanical, non-conceptual manner.

That’s not telling it to draw an ouroboros; that’s telling it to contort a snake-like pattern until it’s bent into the form we understand as “ouroboros.”

We understand what things are. Machines “understand” jack squat and that’s the way they’ll always be. If you want an in-depth explanation as to exactly why, see my article about it here.

Describing something that an artist is unfamiliar with and providing reference images is standard practice for commissioning art.

This doesn't mean that artists lack comprehension of "concepts"; it means that you have to describe things in ways that people understand.

While these AIs don't actually understand anything, this is irrelevant to why they are useful.